Who Invented the First Computer?

Do you think Bill Gates invented the first computer? In reality, computers were on the scene long before most people assume. Charles Babbage began designing mechanical computing machines in the 1820s, and in 1837 he described the first design for a general-purpose computer.

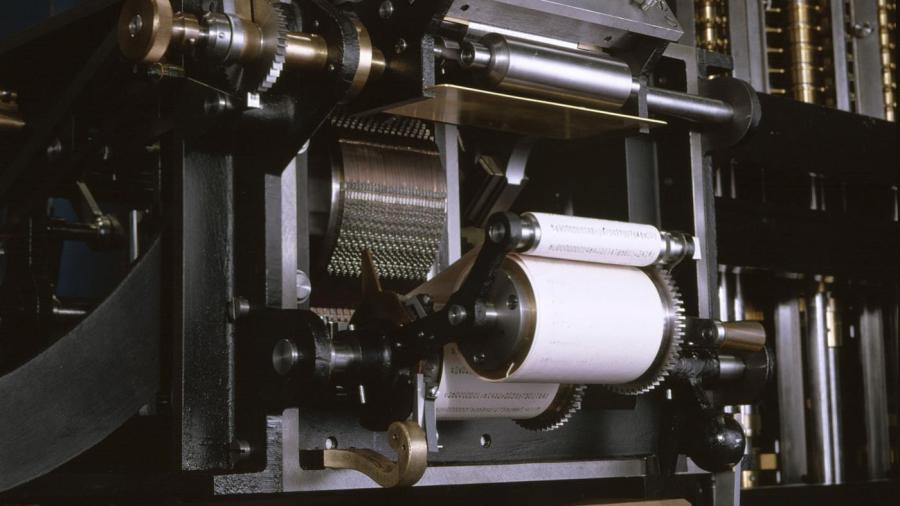

In 1822, Babbage began developing a mechanical calculator with a simple storage mechanism and called it the Difference Engine. The machine was designed to compute tables of numbers automatically and to print the results, so that people could have accurate copies in hand. Babbage envisioned steam as a possible source of power for his engines. A demonstration portion completed in 1832 worked well, but the funding ran out and Babbage never built a complete, fully functional device. He later designed a general-purpose machine and called it the Analytical Engine. Ada Lovelace, considered by many to be the first computer programmer, published detailed notes on it, including a program written for it. This more advanced design took its instructions from punch cards and had memory, flow control and an arithmetic unit, the forerunner of the modern arithmetic logic unit. For his contributions to early computer science, Babbage is often hailed as the father of computers.

What Happened to the Difference Engine?

Babbage’s lack of funds shelved the Difference Engine for well over a century. In 1991, London’s Science Museum completed a working engine built from Babbage’s plans for his later design, Difference Engine No. 2. The device worked, but it is a special-purpose calculator: it tabulates mathematical functions by repeated addition, and it cannot be programmed to do anything else or to make decisions based on its own results. The Analytical Engine is a different story. Babbage’s plans for it were a work in progress and were never complete. If scientists are ever able to complete the plans and build the Analytical Engine, the machine may indeed prove the assertion that Babbage is the father of modern computing.

What About Alan Turing?

In 1936, Alan Turing was a young fellow of King’s College, Cambridge, about to begin doctoral work at Princeton University, when he wrote On Computable Numbers, with an Application to the Entscheidungsproblem. This paper is widely credited as the cornerstone of computer science. Turing’s hypothetical machine could carry out any calculation that can be written down as a step-by-step procedure, which is to say, any problem for which people could write a program. At the time, “computers” were people. They did the engineering calculations and compiled the tables, and they were in extremely short supply during the early days of World War II. With the need for military calculations at an all-time high, the United States built the Harvard Mark I, a machine about 50 feet long that could work through long, complex calculations automatically and far faster than people could by hand. In Britain, Turing designed a machine, the Bombe, that helped the British read the encrypted traffic of the German Navy, a contribution often credited with shortening the war by as much as two years. Turing continued his involvement with these very early computers, and he later envisioned computers becoming powerful enough for artificial intelligence (AI) to become a reality. In 1950, he proposed what is now called the Turing Test, a way to judge whether a machine’s conversation can be told apart from a human’s.

The Dawn of the PC

The earliest computers were huge. An entire team of specialists was devoted to keeping each one running. In the decades after World War II, one of the most important inventions came to market: the microprocessor. Developed at Intel by a team that included engineer Ted Hoff and released in 1971, it paved the way for those huge early computers to shrink, and the personal computer (PC) was soon born. The Altair 8800, a PC kit from Micro Instrumentation and Telemetry Systems (MITS), debuted in the January 1975 issue of Popular Electronics. It wasn’t until Bill Gates, then a Harvard student, and Paul G. Allen wrote a version of the BASIC programming language for the Altair that the PC became easier to use. BASIC itself had been created a decade earlier at Dartmouth College. The duo took the money they earned from the endeavor to form their own company: Microsoft.